Cut through the noise: Learn about Sandbox noise and simulate its impact on your reports

If you’re in the business of running ads, you’ve likely come across the buzz around Privacy Sandbox. Google announced Privacy Sandbox on Android in February 2022, and it’s been making waves in the industry ever since. Privacy Sandbox introduces a suite of APIs for targeting, retargeting, and attribution, but today, let’s zero in on the Attribution Reporting API and explore what advertisers can expect from the reports it generates.

Before we dive into the Privacy Sandbox, let’s rewind to April 2021, when Apple released iOS 14.5, and we all had to get familiar with SKAdNetwork (SKAN). Up until that point, we had the luxury of measuring just about everything for as long as we wanted. Then SKAN came along, and, well, you know the rest.

A major change Apple introduced with SKAN was the concept of privacy thresholds (or Crowd Anonymity)—basically, the idea that if your data doesn’t meet a certain threshold, you’re left with null values in your reports. This was a major shift, as it forced advertisers to rethink their approach to measurement.

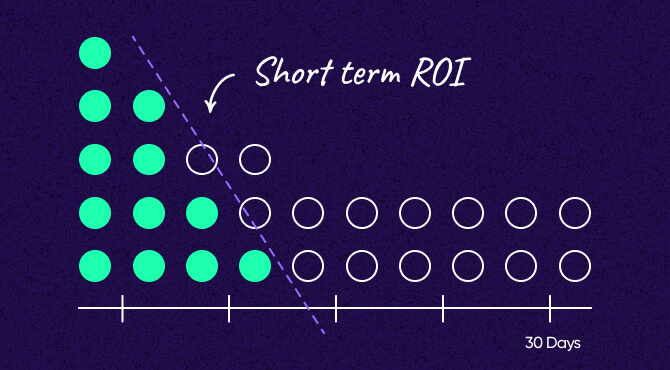

Now, while Sandbox gives you a full 30 days of measurement (compared to SKAN’s 3 postbacks, tied to fixed windows that end on days 2, 7, and 35), it also has its own limits on the amount of data you can actually receive, and that’s where noise comes into play.

But hold on—what exactly is noise?

In the context of Privacy Sandbox, noise refers to the deliberate introduction of random data into reports to help protect user privacy. By adding this “noise,” the system ensures that individual user actions can’t be easily traced back to them, making it harder for anyone to pinpoint specific behaviors.

For advertisers, though, this means the data you get is a bit fuzzier, which can make it trickier to draw precise conclusions from your reports. The trade-off is better privacy for users, but it also means you’ll need to adapt your strategies to work with slightly noisier data.

The more detailed the attribution report, the more it is affected by noise. The noise can push a number in either direction: it can be additive, inflating the reported value above the real one, or subtractive, deflating it below the real one.

When you have a large amount of data, the impact of noise tends to be minimal, so the data you see is fairly accurate. But as the amount of data decreases or becomes more granular, the noise grows relative to the real signal, making it harder to draw precise conclusions. Noise can even appear in metrics with 0 conversions, creating the illusion of activity when there’s none, which adds another layer of complexity.
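To get a feel for why volume matters, here is a minimal Python sketch. It assumes the noise is drawn from a zero-mean Laplace distribution whose spread does not depend on the true count. The `NOISE_SCALE` value and the helper names are made up for illustration and are not the parameters Privacy Sandbox actually uses.

```python
import random

NOISE_SCALE = 10.0  # hypothetical spread of the noise, for illustration only


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Return one zero-mean Laplace sample (difference of two exponential draws)."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def mean_relative_error(true_count: int, scale: float = NOISE_SCALE,
                        trials: int = 10_000, seed: int = 0) -> float:
    """Average |noise| as a fraction of the true count, over many simulated reports."""
    rng = random.Random(seed)
    total_abs_noise = sum(abs(laplace_noise(scale, rng)) for _ in range(trials))
    return total_abs_noise / trials / true_count


for true_count in (10_000, 100, 10):
    error = mean_relative_error(true_count)
    print(f"{true_count:>6} conversions: off by about {error:.1%} on average")

# A bucket with zero real conversions still reports a non-zero number.
phantom = 0 + laplace_noise(NOISE_SCALE, random.Random(1))
print(f"0 conversions reported as {phantom:.1f}")
```

Under these assumptions the same noise is applied at every volume, so the average error should land at roughly 0.1%, 10%, and 100% of the true count for the three cases.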

How can I manage noise in my reports?

As I mentioned before, the more detailed the data, the louder the noise. But do marketers actually have any say in how much noise gets added to their reports?

While you can’t eliminate noise completely, you do have some control over the amount of data you receive for each event you measure. This is where the contribution budget steps in, giving you the ability to manage how much data is allocated to each event.

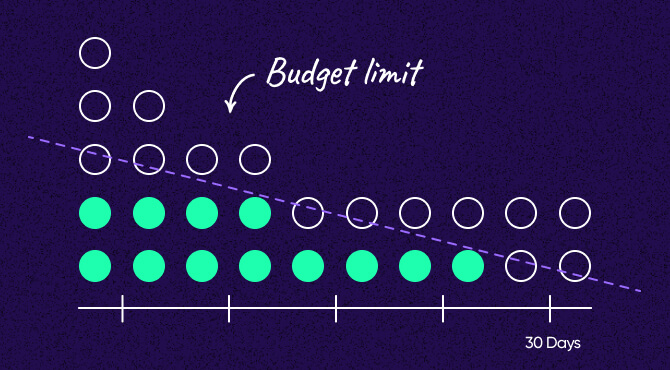

In the Privacy Sandbox, every source event, like an ad click, has a cap on the total of the “values” it can contribute, known as the contribution budget. This budget is set at 65,536, an oddly specific number (it’s 2 to the power of 16), but hey, someone did the math! It’s there to limit how much data can be collected from each source event.

The contribution budget in Sandbox is like having access to a 30-day open buffet: you can sample any dish, anytime you want. But there’s a catch: you have a calorie budget. Each event, like a purchase or registration, is a dish at the buffet. You can collect data from these events, but only up to a certain limit. Once you’ve reached that limit, you’re done collecting data for that source event, just like when you hit your calorie cap at the buffet. This approach helps you gather meaningful insights without overindulging, ensuring user privacy stays intact.
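The buffet idea can be sketched as a tiny budget tracker. This is a toy model, not the Privacy Sandbox API: the `SourceBudget` class and its method are hypothetical, and the sketch assumes that a contribution which does not fit in the remaining budget is dropped entirely.

```python
CONTRIBUTION_BUDGET = 65_536  # 2**16, the per-source cap quoted above


class SourceBudget:
    """Toy tracker for the budget of a single source event (e.g. one ad click)."""

    def __init__(self, budget: int = CONTRIBUTION_BUDGET) -> None:
        self.remaining = budget
        self.spent: dict[str, int] = {}  # budget used so far, per event name

    def contribute(self, event_name: str, value: int) -> bool:
        """Spend `value` from the budget; return False if it does not fit."""
        if value <= 0:
            raise ValueError("contribution values must be positive")
        if value > self.remaining:
            return False  # assumption: an over-budget contribution is dropped
        self.remaining -= value
        self.spent[event_name] = self.spent.get(event_name, 0) + value
        return True


click = SourceBudget()
print(click.contribute("registration", 16_384))  # True
print(click.contribute("purchase", 40_000))      # True
print(click.contribute("purchase", 40_000))      # False, only 9,152 left
print(click.remaining)                           # 9152
```

How much of the budget each event gets is the design decision: the larger an event’s share, the less that event’s numbers are disturbed by noise.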

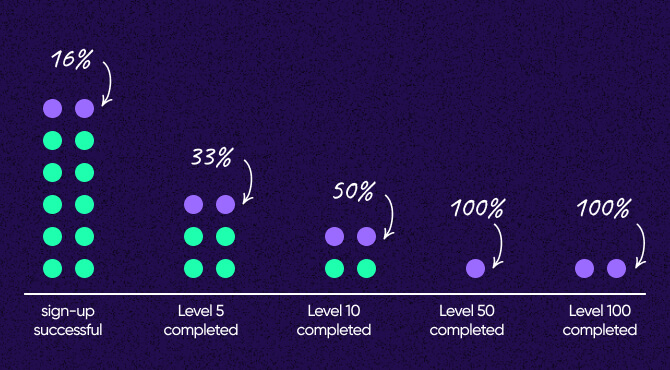

The way you allocate your contribution budget plays a big role in how much noise affects your reports. If you focus on key measurements and have a lot of data to work with, the noise has less impact—it gets spread out across the larger dataset. This works well for large-scale apps where the data volume helps keep things accurate, even when you’re measuring a lot.

But if you try to measure too many details with a smaller dataset, especially in smaller-scale apps, noise becomes a bigger problem. With less data, noise has more room to distort your insights. So, whether you’re running a large-scale app or a smaller one, it’s important to manage your budget wisely to keep noise in check and your data as accurate as possible.

Let’s break it down with a simple example. Say you’re measuring short-term ROI, like revenue within the first 24 hours or by day 7. You might decide to cap the revenue you measure at $100 instead of $1,000. With the lower cap, you’re measuring a narrower range of purchase amounts, so more of your budget goes to each dollar you measure, which reduces the impact of noise and gives you clearer insights. But if you push that limit up to $1,000, you’ll be measuring a wider range of purchase amounts. The same budget is now stretched across all those different amounts, so noise weighs more heavily on each one and could make it harder to see what’s really happening with your data.

Now, think about subscription apps. Before SKAN, offering a 14- to 30-day free trial was the norm for converting users to paid subscriptions. However, SKAN’s short measurement window made it tough to measure these conversions, forcing developers to rely on early indicators within the first 24 to 48 hours. With Privacy Sandbox, developers can focus on later signals, allowing them to return to longer trial periods and measure conversions more accurately by prioritizing what really matters for long-term success.

Another critical factor is how you segment your campaigns. The more you break down your data by factors like campaign, ad set, or creative, the more your contribution budget gets stretched. Each segment eats up part of your budget, which means the more detailed your segmentation, the more noise you’re likely to encounter.
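A rough back-of-the-envelope sketch shows how quickly breakdowns multiply. It assumes conversions are split evenly across buckets and that every bucket receives noise of the same average size; `NOISE_SCALE`, the conversion total, and the breakdown sizes are hypothetical numbers chosen for illustration.

```python
from math import prod

NOISE_SCALE = 10.0  # hypothetical average size of the noise added to each bucket


def bucket_count(breakdown: dict[str, int]) -> int:
    """Number of distinct buckets created by a breakdown, e.g. 5 campaigns x 4 ad sets."""
    return prod(breakdown.values())


def expected_relative_error(total_conversions: int, breakdown: dict[str, int],
                            noise_scale: float = NOISE_SCALE) -> float:
    """Average noise as a fraction of one bucket's conversions, assuming an even split."""
    per_bucket = total_conversions / bucket_count(breakdown)
    return noise_scale / per_bucket


coarse = {"campaign": 5}
fine = {"campaign": 5, "ad_set": 4, "creative": 6}

for name, breakdown in (("coarse", coarse), ("fine", fine)):
    error = expected_relative_error(12_000, breakdown)
    print(f"{name}: {bucket_count(breakdown)} buckets, about {error:.1%} error per bucket")
# coarse: 5 buckets, about 0.4% error per bucket
# fine: 120 buckets, about 10.0% error per bucket
```

The same 12,000 conversions go from barely affected to noticeably distorted simply because they were sliced into 24 times as many buckets.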

Scaling up to cut down on noise

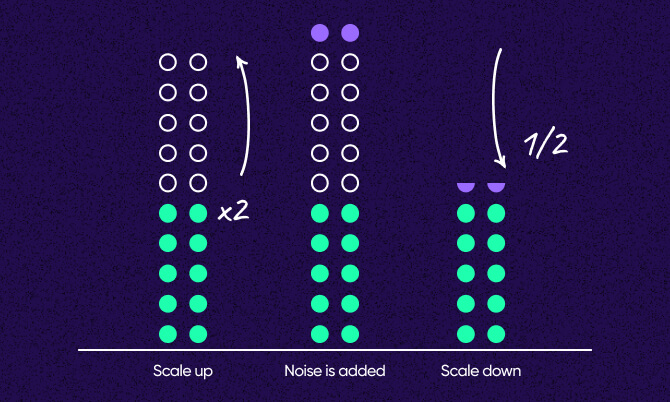

Another way of reducing the impact of noise is through data scaling. Scaling is a technique used to ensure that noise doesn’t distort important data points. By adjusting the values in your data, MMPs like AppsFlyer can amplify key signals, making them less susceptible to the noise that’s added to protect privacy.

For example, if your raw data shows a small number of user actions, like just a few sign-ups, scaling up increases these values, making them more robust against the noise. After the noise is added, the data is then scaled back down to more accurately reflect the true numbers. This process ensures that even after noise is introduced, your data remains clear and useful.

With scaling, we enable you to maintain the accuracy of your reports, ensuring that the important insights are preserved while still adhering to privacy requirements. This complements the strategic management of your contribution budget, helping you get the most out of the data you collect.
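The scale-up, add-noise, scale-down sequence can be sketched in a few lines of Python. This is a simplified illustration, not AppsFlyer’s implementation: it assumes Laplace noise with a hypothetical spread (`NOISE_SCALE`), treats contributions as decimals rather than whole numbers, and picks the scaling factor so that a purchase at the revenue cap uses the full contribution budget.

```python
import random

CONTRIBUTION_BUDGET = 65_536  # per-source cap quoted earlier in the article
NOISE_SCALE = 6_553.6         # hypothetical noise spread, in scaled units


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Return one zero-mean Laplace sample (difference of two exponential draws)."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_total(revenues: list[float], cap: float, rng: random.Random) -> float:
    """Scale up, add noise to the aggregate, then scale back down to dollars."""
    factor = CONTRIBUTION_BUDGET / cap  # scaled units per dollar
    scaled_sum = sum(min(r, cap) * factor for r in revenues)
    noisy_sum = scaled_sum + laplace_noise(NOISE_SCALE, rng)
    return noisy_sum / factor


def average_error(revenues: list[float], cap: float,
                  trials: int = 5_000, seed: int = 0) -> float:
    """Average absolute error, in dollars, of the reported total."""
    rng = random.Random(seed)
    true_total = sum(min(r, cap) for r in revenues)
    errors = (abs(noisy_total(revenues, cap, rng) - true_total) for _ in range(trials))
    return sum(errors) / trials


revenues = [4.99] * 50  # fifty hypothetical purchases of $4.99
for cap in (100, 1_000):
    error = average_error(revenues, cap)
    print(f"cap ${cap}: total is off by about ${error:.2f} on average")
```

With these made-up numbers, the $1,000 cap leaves the reported total roughly ten times further from the truth than the $100 cap, because each dollar is scaled up ten times less before the noise is added. That is the same trade-off as in the $100 versus $1,000 example earlier.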

Key takeaways

When working within the Privacy Sandbox, there are a few things you’ll want to keep in mind to keep your data clear and actionable:

- Number of concurrent campaigns: Running too many campaigns at once can quickly spread your contribution budget too thin, leading to more noise and less reliable data. It’s all about finding the right balance to get meaningful insights without overextending yourself.

- Campaign breakdown: The more detailed your segmentation, the more noise you’re going to deal with. Focus on the breakdowns that give you the most bang for your buck.

- Number of events: Trying to measure too many events can drain your budget fast. Focus on the key metrics that align with your goals, whether you’re looking for early signals or long-term outcomes, to keep your data quality in check.

- Report frequency: Pulling reports too often can increase noise because each time you generate a report, a new layer of noise is added to protect privacy. If you generate reports frequently, the repeated introduction of noise can make it harder to get clear insights, especially if your dataset is small. It’s important to strike the right balance—getting the data you need without letting too much noise make your reports less accurate.

By keeping these factors in mind, you can fine-tune your strategy to cut down on noise and get the most value out of your data.
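The report-frequency point can be made concrete with a little arithmetic. The sketch below assumes each report receives its own independent Laplace noise of the same spread and that you add the reports together to get a total for the period; `NOISE_SCALE` is a hypothetical value.

```python
from math import sqrt

NOISE_SCALE = 10.0  # hypothetical Laplace scale of the noise added to each report


def combined_noise_std(report_count: int, noise_scale: float = NOISE_SCALE) -> float:
    """Standard deviation of the summed noise across independent reports.

    One Laplace draw has standard deviation sqrt(2) * scale; independent
    draws add in variance, so the total grows with the square root of the count.
    """
    return sqrt(2 * report_count) * noise_scale


monthly = combined_noise_std(1)
daily = combined_noise_std(30)
print(f"one report: {monthly:.1f}, thirty reports: {daily:.1f} ({daily / monthly:.1f}x)")
# one report: 14.1, thirty reports: 77.5 (5.5x)
```

Under these assumptions, thirty daily reports carry about 5.5 times the noise of a single monthly report covering the same conversions.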

If you want to see firsthand how noise will impact your reports, we’ve got just the tool for you. Check out our noise simulator, where you can explore different scenarios and see how your data might look with varying levels of noise.