The force of AI in ad fraud: fighting innovation with innovation

AI is much like the famous FORCE so brilliantly depicted in Star Wars.

The advancement of Artificial Intelligence is delivering amazing benefits in so many areas. But just like the FORCE, AI is also exploited by a dark side in ways that are harmful to people and businesses, particularly in ad fraud.

On the one hand, AI can help detect fraud with remarkable precision. Yet at the same time, it gives fraudsters the ability to orchestrate sophisticated scams, putting billions of ad budget dollars at risk.

The pressing question is: can AI eradicate ad fraud, or is it fueling the problem?

By understanding that AI is a double-edged sword, advertisers can turn it into their greatest defense against evolving threats. With the right strategies, businesses can embrace AI not just as a tool for optimization but as a robust shield against fraud.

The growing threat of AI-driven ad fraud

Ad fraud isn’t new, of course, but AI has taken it to new levels of sophistication. Bad actors now use advanced techniques to create fake traffic, hijack devices, and mimic human behavior with precision, making their schemes much harder to detect. These methods cost advertisers billions each year, draining budgets and eroding trust.

In broad terms, ad fraud refers to deceptive practices that manipulate ad systems to divert spending. Common forms include:

- Fake traffic: Bots imitating humans to inflate impressions or clicks.

- Botnets: Networks of hijacked devices orchestrating fraudulent activities on a massive scale.

- Click fraud: Artificially boosting click-through rates, often to exhaust a competitor’s ad budget or generate illicit revenue.

- Fake users: Fraudsters creating realistic profiles that mimic real user engagement.

Why AI is making ad fraud worse

AI has become a catalyst for ad fraud, giving fraudsters more sophisticated, scalable, and effective tools. Open-source AI platforms have lowered the barrier to entry, enabling bad actors to deploy advanced fraud schemes with minimal effort.

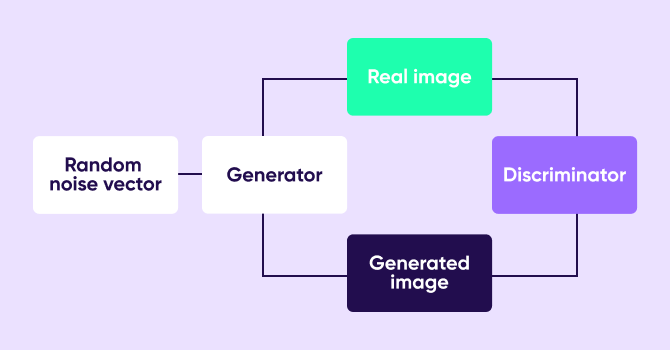

Fraudsters use Generative Adversarial Networks (GANs) to create synthetic content, including deepfake ads and fake users that interact with real campaigns. These AI-generated interactions can convincingly mimic human behavior, making them difficult to detect and flag. For example, fraudsters leverage GANs to generate fake user profiles that seamlessly engage with ads, tricking analytics tools into recording fraudulent engagement as authentic.

AI is also enhancing click farms, making them more difficult to detect. AI-driven algorithms can simulate diverse user behaviors, such as scrolling, dwell time, and varied click patterns, making fraudulent engagement look increasingly realistic. AI is also undermining Click-To-Install-Time (CTIT) detection: fraudsters can tune the timing and randomness of fabricated click-to-install flows, which no real user ever performed, so that they resemble those of real users.
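To make the CTIT point concrete, here is a minimal sketch of a threshold-based CTIT check, the kind of static rule that well-randomized timing can slip past. The function names and both thresholds are hypothetical and chosen only for illustration; they are not taken from any vendor's product.

```python
# Hypothetical thresholds, for illustration only.
MIN_PLAUSIBLE_CTIT_SECONDS = 10          # too fast for a real download and first open
MAX_PLAUSIBLE_CTIT_SECONDS = 24 * 3600   # click long forgotten by install time


def ctit_seconds(click_ts: float, install_ts: float) -> float:
    """Click-To-Install-Time: seconds between the ad click and the install."""
    return install_ts - click_ts


def classify_install(click_ts: float, install_ts: float) -> str:
    """Label an install by where its CTIT falls relative to the thresholds."""
    ctit = ctit_seconds(click_ts, install_ts)
    if ctit < MIN_PLAUSIBLE_CTIT_SECONDS:
        return "suspicious_short"
    if ctit > MAX_PLAUSIBLE_CTIT_SECONDS:
        return "suspicious_long"
    return "plausible"


# (click timestamp, install timestamp) pairs in seconds
installs = [(0, 3), (0, 95), (0, 200_000)]
labels = [classify_install(click, install) for click, install in installs]
```

A fraudster who draws fake CTIT values from inside the plausible window passes this check every time, which is why fixed cutoffs alone are no longer enough.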

The financial impact is staggering. Statista predicts ad fraud losses will climb from $84 billion in 2023 to as much as $172 billion by 2028. With the accessibility of AI tools, fraudsters are becoming more sophisticated and widespread.
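For a sense of scale, the two figures above imply a compound annual growth rate that can be worked out directly. This is a back-of-the-envelope sketch, assuming the upper-end 2028 figure and smooth year-over-year growth; the function name is ours.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, end value, and span."""
    return (end_value / start_value) ** (1 / years) - 1


# $84 billion in 2023 growing to as much as $172 billion by 2028
rate = implied_annual_growth(84, 172, 2028 - 2023)
print(f"Implied annual growth: {rate:.1%}")
```

That works out to roughly 15% growth per year at the top end of the projection.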

App marketing is also plagued by ad fraud. According to AppsFlyer estimates, financial exposure to app install ad fraud eclipsed $17 billion in 2024 (this refers to the amount of money that would have been lost to fraud had there not been any fraud detection; in reality, much of this is blocked and therefore not paid for).

Consider CycloneBot, a scheme targeting Connected TV (CTV) platforms. Using AI, it inflates viewing sessions and traffic, costing advertisers millions monthly. Other examples include BeatSting, an audio ad fraud scheme that generates fake audio traffic and siphons over $1 million per month from advertisers, and FM scams, another audio scheme in which fraudsters generate fake audio traffic that appears to be legitimate user activity across various devices and audio players.

These fraudulent interactions distort engagement metrics and mislead advertisers into believing they are reaching real audiences. These cases highlight how fraudsters are leveraging AI to scale their operations and evade detection.

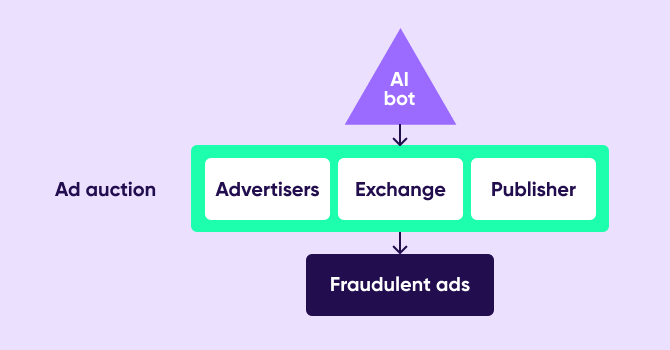

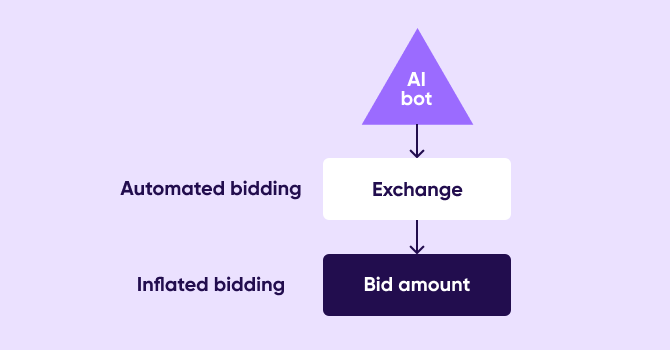

Scalper bots have also infiltrated digital campaigns. AI tools are being used to automate the bidding for digital ad placements, artificially inflating costs and leading to wasted ad spend. These AI-powered fraud schemes execute complex multi-click patterns, targeting high-value programmatic campaigns and making detection increasingly challenging.

There are almost certainly additional schemes that have yet to be uncovered, as fraudsters continue to innovate, leveraging AI in diverse ways to exploit vulnerabilities across the digital advertising ecosystem.

Fighting fire with fire: how AI can combat ad fraud

While AI enables more sophisticated fraud, it’s also the most powerful tool to fight it. Advanced machine learning models and predictive analytics help advertisers to detect and block fraudulent activities in real time, often before damage is done.

AI solutions excel in several areas when combating ad fraud:

- Anomaly detection: Algorithms monitor traffic and flag unusual patterns, such as sudden spikes or inconsistent behaviors.

- Continuous learning: By analyzing new data, AI evolves to detect emerging fraud tactics and stay ahead of fraudsters.

- Enhanced accuracy: AI-powered systems distinguish legitimate user activity from fraudulent behavior with high precision, reducing false positives.
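As a rough illustration of the anomaly detection idea, the sketch below flags sudden traffic spikes by comparing each hourly click count with the mean and standard deviation of the preceding window. It is a simple z-score rule, not a description of any production system, and the function name, window size, and threshold are assumptions.

```python
from statistics import mean, stdev


def flag_spikes(counts, window=24, threshold=3.0):
    """Return indices whose count sits more than `threshold` standard
    deviations away from the mean of the preceding `window` counts."""
    flagged = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if counts[i] != mu:
                flagged.append(i)
            continue
        if abs(counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# 24 hours of ordinary traffic followed by one abrupt spike (toy data)
hourly_clicks = [100, 102, 98, 101, 99, 103, 97, 100] * 3 + [950]
spikes = flag_spikes(hourly_clicks)
```

Real systems learn far richer baselines (seasonality, per-publisher behavior, device mix), but the principle is the same: model what normal looks like, then flag what deviates from it.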

Success stories

AppsFlyer's AI-powered tools prevent billions of dollars in fraudulent transactions by adapting to new threats and providing real-time fraud prevention. Similarly, other solutions employ predictive analytics to anticipate fraud trends, using graph-based methods to detect fraudulent networks and connections.
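One simple way to picture a graph-based method is to link accounts that share an attribute, such as an IP address or device fingerprint, and then look for unusually large connected groups. The sketch below does this with a union-find structure. The event format, the `min_size` cutoff, and the function name are illustrative assumptions, not a description of any specific product.

```python
from collections import defaultdict


def find_clusters(events, min_size=3):
    """events: iterable of (account_id, shared_attribute) pairs.
    Returns sorted lists of accounts linked, directly or indirectly,
    through shared attributes, keeping only groups of `min_size` or more."""
    parent = {}

    def find(node):
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Link every account to each attribute it was seen with.
    for account, attribute in events:
        union(("account", account), ("attr", attribute))

    clusters = defaultdict(set)
    for node in list(parent):
        kind, value = node
        if kind == "account":
            clusters[find(node)].add(value)

    groups = [sorted(m) for m in clusters.values() if len(m) >= min_size]
    return sorted(groups, key=lambda group: group[0])


events = [
    ("u1", "ip:10.0.0.1"),
    ("u2", "ip:10.0.0.1"),
    ("u2", "dev:abc"),
    ("u3", "dev:abc"),
    ("u4", "ip:10.0.0.9"),
]
suspicious_groups = find_clusters(events)
```

Here `u1` and `u3` never share an attribute directly, yet they end up in the same group through `u2`, which is the kind of hidden connection that per-event rules tend to miss.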

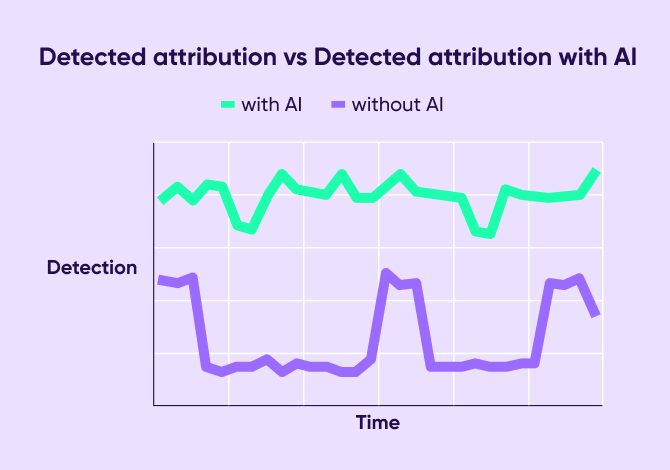

Our AI improves the detection, speed, and deterrence of ad fraud, with key benefits including:

- Faster fraud detection: Identifies fraudulent activity up to 8X faster, helping businesses avoid substantial financial losses and data inaccuracies.

- Improved deterrence: Fraud attempts are caught and mitigated 14X faster, significantly reducing the window for fraudsters to exploit new bypasses and loopholes.

- Greater efficacy: Maintains over 90% fraud detection efficacy even after a fraud bypass, with an average decline in detection accuracy of just 9%.

- Enhanced accuracy: Ensures a 7X improvement in detection accuracy.

- Real-time detection: Identifies up to 60% more post-attribution fraud in real time, greatly reducing the number of fake users and installs.

Challenges in using AI to combat ad fraud

Despite its effectiveness, AI is not a silver bullet. Fraud detection systems often rely heavily on historical data, which can make it difficult to identify completely new fraud tactics. This creates a constant cat-and-mouse dynamic—fraudsters adapt as quickly as detection improves.

Another challenge is explainability. AI-driven fraud detection systems can sometimes produce results that are difficult to interpret, making it harder for advertisers to understand why certain activities are flagged as fraudulent. Ensuring transparency and interpretability remains a crucial factor for AI adoption in fraud prevention.

Privacy and ethical concerns further complicate matters. AI fraud prevention tools must comply with global data protection regulations such as GDPR while still maintaining the effectiveness needed to combat sophisticated fraud tactics. Striking the right balance between user privacy and fraud detection remains an ongoing challenge.

Turning AI into your ad fraud ally

To stay ahead of fraud, organizations need a comprehensive and proactive approach. AI alone isn’t enough—it must be combined with human expertise and strategic collaboration to be truly effective. Here are the steps businesses should take:

- Invest in advanced AI solutions: Leading tools offer real-time detection and models that keep learning as fraud tactics change.

- Blend AI and human expertise: Analysts play a vital role in refining AI outputs, interpreting nuanced patterns, and addressing edge cases that automated systems may miss.

- Collaborate across platforms: Sharing intelligence with peers and industry stakeholders strengthens collective defenses against sophisticated fraud schemes.

- Stay adaptive: Regularly update detection models with new data to counteract evolving fraud tactics.

Future-proofing strategies

Fighting ad fraud requires businesses to think ahead. Federated learning, for instance, lets organizations train a shared fraud detection model without exchanging raw data, which helps protect privacy while improving results.
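The sketch below shows the core loop of federated averaging under simplifying assumptions: each party trains a small logistic-regression model on its own private data, and only the resulting weights, never the raw rows, are sent to a coordinator that averages them. The toy data, feature choice, and function names are hypothetical.

```python
import math


def local_update(weights, rows, labels, lr=0.1, epochs=20):
    """Run a few gradient-descent steps of logistic regression
    on one party's private data and return the new weights."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(rows, labels):
            z = sum(wi * xi for wi, xi in zip(w, x))
            p = 1.0 / (1.0 + math.exp(-z))
            for j, xj in enumerate(x):
                grad[j] += (p - y) * xj
        w = [wi - lr * g / len(rows) for wi, g in zip(w, grad)]
    return w


def federated_average(updates):
    """updates: list of (weights, n_samples) pairs.
    Returns the sample-weighted mean of the weight vectors."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[j] * n for w, n in updates) / total for j in range(dim)]


# Toy data. Features: [bias, clicks_per_minute]; label 1 means fraud.
party_a = ([[1.0, 0.2], [1.0, 0.3], [1.0, 5.0]], [0, 0, 1])
party_b = ([[1.0, 0.1], [1.0, 4.0], [1.0, 6.0], [1.0, 0.4]], [0, 1, 1, 0])

global_w = [0.0, 0.0]
for _ in range(5):  # five communication rounds
    updates = [
        (local_update(global_w, rows, labels), len(rows))
        for rows, labels in (party_a, party_b)
    ]
    global_w = federated_average(updates)
```

Keeping raw data local is not a complete privacy guarantee on its own, since model updates can still leak information, so real deployments usually add protections such as secure aggregation.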

Additionally, fostering a culture of innovation and experimentation—through cross-functional teams and partnerships with technology leaders—helps organizations remain agile and proactive. Building industry alliances can further bolster defenses by pooling insights and resources.

AI innovation has proven to be a great advancement for businesses, especially in recent years, helping many enhance their capabilities, offerings, services, and beyond.

But it is a double-edged sword that can be used for harm just as easily as for good. Businesses must therefore understand the pros and cons of such powerful technology, and keep contributing to it, innovating with it, and using it against ad fraud in order to stay ahead of increasingly sophisticated fraudsters.

Key takeaways

- AI is both a risk and a solution. Fraudsters exploit it for sophisticated scams, but it also powers the most effective fraud detection tools.

- Ad fraud is growing. Losses are projected to reach $172 billion by 2028, making proactive fraud prevention more critical than ever.

- AI-driven fraud detection works. Solutions like anomaly detection and predictive modeling are key to combating evolving fraud tactics.

- Human expertise is still essential. AI alone isn’t enough—expert oversight ensures more accurate and adaptive fraud prevention.

- Collaboration and innovation are key. Industry-wide cooperation and continuous technological advancements are the best ways to stay ahead of fraudsters.